Speech Synthesis for Virtual Assistants


Project Overview

Objective

The “Speech Synthesis for Virtual Assistants” project builds a dataset for training machine learning models that generate human-like speech for virtual assistant applications. The dataset is intended to support virtual assistants that sound more natural and are easier to understand across a variety of platforms and domains.

Scope

This project involves collecting recorded speech from three kinds of sources (professional voice actors, public domain speech datasets, and user-contributed recordings) and annotating each recording with a transcription and linguistic attributes.

Sources

- Voice Actors: Collaborate with professional voice actors to record a wide range of scripted dialogues and phrases in different accents and tones.

- Public Domain Datasets: Access publicly available speech datasets that contain a diverse range of linguistic content.

- User Contributions: Encourage user contributions by allowing individuals to submit their recorded speech for virtual assistant development.

Data Collection Metrics

- Total Speech Recordings: 50,000

- Recordings from Voice Actors: 30,000

- Recordings from Public Domain Datasets: 10,000

- Recordings from User Contributions: 10,000

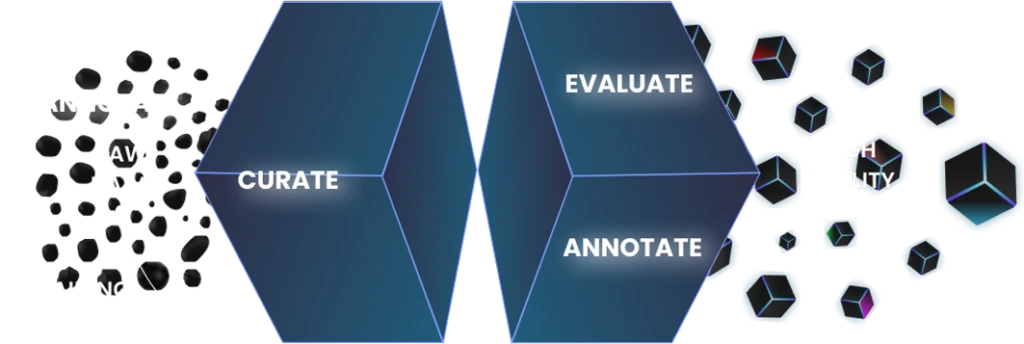
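As a quick sanity check on the figures above, the per-source counts can be summed and converted to shares of the dataset. The sketch below uses only the numbers listed in this section; the dictionary keys are illustrative names, not identifiers from the project.

```python
# Recording counts per source, taken from the metrics listed above.
# The key names are illustrative, not project identifiers.
recordings_by_source = {
    "voice_actors": 30_000,
    "public_domain_datasets": 10_000,
    "user_contributions": 10_000,
}

# The per-source counts should add up to the stated total of 50,000.
total = sum(recordings_by_source.values())

# Share of the full dataset contributed by each source, in percent.
shares = {
    source: 100 * count / total
    for source, count in recordings_by_source.items()
}

for source, share in shares.items():
    print(f"{source}: {share:.0f}%")
```

Voice actor recordings make up 60% of the dataset, with the remaining 40% split evenly between public domain datasets and user contributions.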

Annotation Process

Stages

- Transcription: Annotate each speech recording with accurate transcriptions to capture the spoken words and phrases.

- Linguistic Attributes: Record metadata for each recording, including accent, gender, tone, and the emotion expressed in the speech.
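One way to picture the output of these two stages is a single annotation record per recording. The sketch below is an assumption about how such a record might be laid out: the class name, the field names, the `is_complete` helper, and the example values are all hypothetical and are not taken from the project's actual schema.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class RecordingAnnotation:
    """Hypothetical annotation record for one speech recording."""
    recording_id: str    # illustrative identifier format
    source: str          # e.g. voice actor, public domain, user contribution
    transcription: str   # output of the transcription stage
    accent: str          # linguistic attributes named in the text
    gender: str
    tone: str
    emotion: str

    def is_complete(self) -> bool:
        # A record counts as complete only if every field is non-blank.
        return all(str(value).strip() for value in asdict(self).values())


# Example values are placeholders, not real project data.
example = RecordingAnnotation(
    recording_id="rec-000001",
    source="voice_actor",
    transcription="What's the weather like tomorrow?",
    accent="en-GB",
    gender="female",
    tone="neutral",
    emotion="calm",
)

print(json.dumps(asdict(example), indent=2))
```

Keeping the transcription and the attributes in one record makes it straightforward to check that every recording has passed through both stages.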

Annotation Metrics

- Speech Recordings with Transcriptions: 50,000

- Speech Recordings with Linguistic Attributes: 50,000

Quality Assurance

Stages

- Annotation Verification: Implement a validation process in which linguistic experts review and verify the accuracy of transcriptions and linguistic attributes.

- Data Quality Control: Remove low-quality or noisy recordings from the dataset.

- Data Security: Protect sensitive information and maintain the privacy of user-contributed speech data.
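The data quality control stage could be sketched as a simple threshold filter. The case study does not say how recording quality is measured, so everything here is an assumption: the signal-to-noise and duration measures, the threshold values, and the names `RecordingStats`, `passes_quality_check`, and `split_by_quality` are hypothetical.

```python
from typing import NamedTuple


class RecordingStats(NamedTuple):
    """Hypothetical per-recording measurements, assumed to be precomputed."""
    recording_id: str
    duration_s: float   # length of the recording in seconds
    snr_db: float       # estimated signal-to-noise ratio in decibels


# Assumed thresholds for illustration only; the source does not specify any.
MIN_SNR_DB = 20.0
MIN_DURATION_S = 0.5


def passes_quality_check(stats: RecordingStats) -> bool:
    """Return True if the recording is long enough and not too noisy."""
    return stats.snr_db >= MIN_SNR_DB and stats.duration_s >= MIN_DURATION_S


def split_by_quality(recordings):
    """Partition recordings into (kept, removed) lists, preserving order."""
    kept, removed = [], []
    for stats in recordings:
        (kept if passes_quality_check(stats) else removed).append(stats)
    return kept, removed


# Placeholder measurements, not real project data.
batch = [
    RecordingStats("rec-000001", duration_s=3.2, snr_db=34.0),
    RecordingStats("rec-000002", duration_s=2.8, snr_db=11.5),  # too noisy
    RecordingStats("rec-000003", duration_s=0.2, snr_db=30.0),  # too short
]

kept, removed = split_by_quality(batch)
print([r.recording_id for r in kept])
print([r.recording_id for r in removed])
```

Returning the removed recordings alongside the kept ones makes it possible to report how many were dropped and to audit the thresholds later.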

QA Metrics

- Annotation Validation Cases: 5,000 (10% of total)

- Data Cleansing: Remove low-quality recordings
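The 10% validation figure corresponds to 5,000 of the 50,000 recordings. The case study does not describe how those cases are chosen; the sketch below assumes simple random sampling with a fixed seed so the selection is reproducible. The function name `sample_for_validation` and the identifier format are hypothetical.

```python
import random


def sample_for_validation(recording_ids, fraction=0.10, seed=0):
    """Pick a reproducible random subset of recordings for expert review.

    Simple random sampling is an assumption; the source only states
    that 10% of the recordings are validated.
    """
    rng = random.Random(seed)  # local generator, leaves global state untouched
    sample_size = round(len(recording_ids) * fraction)
    return rng.sample(recording_ids, sample_size)


# Placeholder identifiers standing in for the 50,000 recordings.
recording_ids = [f"rec-{i:06d}" for i in range(50_000)]

validation_ids = sample_for_validation(recording_ids)
print(len(validation_ids))  # 5000
```

If the validated set needs to reflect the 60/20/20 source mix, the same function could be applied separately to each source's recordings.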

Conclusion

The “Speech Synthesis for Virtual Assistants” dataset is a resource for improving virtual assistant technology. It combines 50,000 speech recordings from three kinds of sources with accurate transcriptions and linguistic attributes covering accent, gender, tone, and emotion, and 10% of the annotations are verified by linguistic experts. This gives developers a foundation for training speech synthesis models that help virtual assistants communicate naturally and effectively with users across various applications and domains.

