Content Moderation for User-Generated Content Platforms


Project Overview

Objective

Our objective is to provide comprehensive data collection and annotation services for user-generated content platforms. We gather diverse datasets, including images, videos, text, and speech, which are used to train machine learning models for content moderation. The goal is to strengthen these models while balancing content freedom against effective moderation, so that users enjoy a vibrant and safe experience.

Scope

Sources

- AI and Machine Learning Algorithms: Automated algorithms detect and filter out inappropriate or harmful content at scale, helping platforms maintain a safe and respectful online environment.

- Human Moderators: Human moderators review user-generated content to confirm that it complies with platform guidelines and community standards. Working alongside AI, they add the judgment and context that automated systems may lack.
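Combining automated and human moderation is often implemented as confidence-based routing: clear-cut cases are handled automatically and uncertain ones go to a person. The Python sketch below is illustrative only. The `Item` type, the `route` function, and both threshold values are hypothetical, and it assumes a classifier that outputs a harm probability between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned on labelled data.
AUTO_REMOVE_THRESHOLD = 0.95
AUTO_APPROVE_THRESHOLD = 0.10


@dataclass
class Item:
    item_id: str
    harm_score: float  # assumed classifier output: probability the item is harmful


def route(item: Item) -> str:
    """Decide whether an item is handled automatically or sent to a human moderator."""
    if item.harm_score >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"
    if item.harm_score <= AUTO_APPROVE_THRESHOLD:
        return "auto_approve"
    # Everything in between needs human judgment and context.
    return "human_review"


if __name__ == "__main__":
    queue = [Item("a", 0.99), Item("b", 0.03), Item("c", 0.55)]
    for it in queue:
        print(it.item_id, route(it))
```

Narrowing the band between the two thresholds sends fewer items to human moderators at the cost of more automated mistakes; where to set them is a policy decision as much as a technical one.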

Data Collection Metrics

- Volume: To date, we have collected 1.5 million data points and annotated 1.2 million items across various formats.

- Completeness: We check that every dataset covers all required elements.

- Accuracy: We verify the data we gather for correctness so that it can be relied on for model training.
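A completeness check like the one described above can be expressed as a per-record test for required fields. This Python sketch assumes a hypothetical schema (`REQUIRED_FIELDS`) and in-memory records. Its last lines relate the reported volume figures, assuming the 1.5 million data points and 1.2 million annotated items are counted in the same units.

```python
# Hypothetical schema: fields every collected record must carry.
REQUIRED_FIELDS = ("item_id", "modality", "content_uri", "label")


def is_complete(record: dict) -> bool:
    """A record is complete if every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def completeness_rate(records: list[dict]) -> float:
    """Share of records that pass the completeness check."""
    if not records:
        return 0.0
    return sum(is_complete(r) for r in records) / len(records)


records = [
    {"item_id": "1", "modality": "image", "content_uri": "content/1.jpg", "label": "safe"},
    {"item_id": "2", "modality": "text", "content_uri": "content/2.txt", "label": ""},  # missing label
]
print(completeness_rate(records))  # 0.5

# Annotation coverage, assuming both reported figures count the same units.
collected, annotated = 1_500_000, 1_200_000
print(f"{annotated / collected:.0%} of collected items annotated")  # 80% of collected items annotated
```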

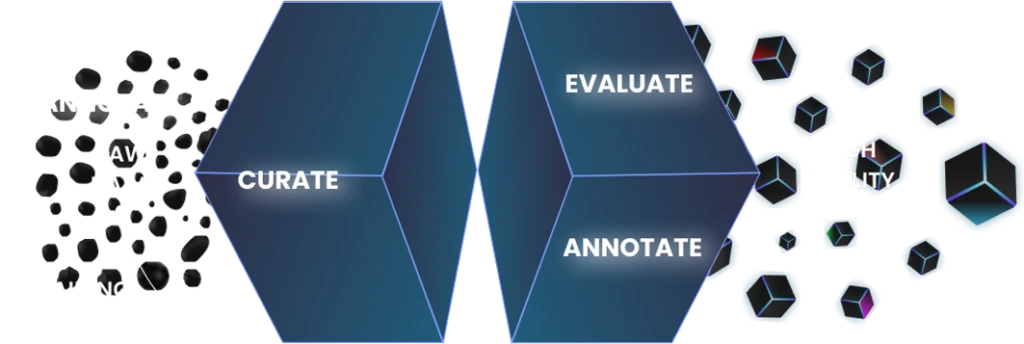

Annotation Process

Stages

- Content Submission: We receive user-generated content in a range of formats, from text and images to videos.

- Automated Filtering: Automated algorithms then categorize and sort the incoming content.

- Human Review: Trained moderators review the content to confirm that it meets platform guidelines and quality standards.

- Moderation Decision: Based on this review, we decide whether the content is suitable, balancing freedom of expression against appropriateness.

- User Notifications: Finally, we inform users of the status of their content. This transparency promotes accountability.
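The stages above can be modelled as a status lifecycle in which each submission moves forward one stage at a time and the user is notified once a final decision is made. The Python sketch below is a hypothetical model: `Status`, `TRANSITIONS`, and `Submission` are illustrative names, not part of any specific platform, and letting automated filtering settle clear-cut cases directly is an assumption.

```python
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"
    AUTO_FILTERED = "auto_filtered"
    IN_HUMAN_REVIEW = "in_human_review"
    APPROVED = "approved"
    REMOVED = "removed"


# Allowed transitions (hypothetical). Clear-cut cases may be decided right
# after automated filtering; the rest go through human review.
TRANSITIONS = {
    Status.SUBMITTED: {Status.AUTO_FILTERED},
    Status.AUTO_FILTERED: {Status.IN_HUMAN_REVIEW, Status.APPROVED, Status.REMOVED},
    Status.IN_HUMAN_REVIEW: {Status.APPROVED, Status.REMOVED},
    Status.APPROVED: set(),
    Status.REMOVED: set(),
}

# Final decisions that trigger a user notification.
NOTIFY_ON = {Status.APPROVED, Status.REMOVED}


class Submission:
    def __init__(self, item_id: str, user_id: str):
        self.item_id = item_id
        self.user_id = user_id
        self.status = Status.SUBMITTED
        self.notifications: list[str] = []

    def advance(self, new_status: Status, reason: str = "") -> None:
        """Move to the next stage, rejecting transitions the lifecycle does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status.value} to {new_status.value}")
        self.status = new_status
        if new_status in NOTIFY_ON:
            # Tell the user the outcome and the reason, so they can appeal if they disagree.
            message = f"Your content {self.item_id} was {new_status.value}"
            if reason:
                message += f": {reason}"
            self.notifications.append(message)


sub = Submission("post-42", "user-7")
sub.advance(Status.AUTO_FILTERED)
sub.advance(Status.IN_HUMAN_REVIEW)
sub.advance(Status.REMOVED, "violates community guidelines")
print(sub.notifications)
```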

Annotation Metrics

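- Precision & Recall: We measure how accurately inappropriate content is identified. Precision is the share of flagged items that are actually inappropriate; recall is the share of inappropriate items that are flagged.

- False Positives & Negatives: We track acceptable content that is wrongly flagged (false positives) and harmful content that is missed (false negatives), and tune our systems to keep both low.

- User Feedback: We track how often users appeal moderation decisions; low appeal rates indicate that our processes align with user expectations.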
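Precision, recall, false positives, false negatives, and appeal rate can all be computed from a labelled sample of moderation decisions. The Python sketch below assumes parallel lists of booleans (True means "flagged" in `predicted` and "actually inappropriate" in `actual`); the sample data and appeal counts are made up for illustration.

```python
def moderation_metrics(predicted: list[bool], actual: list[bool]) -> dict:
    """Compare moderation flags with ground-truth labels.

    predicted[i] is True if the system flagged item i as inappropriate;
    actual[i] is True if item i really is inappropriate.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)       # harmful and flagged
    fp = sum(1 for p, a in pairs if p and not a)   # acceptable but flagged
    fn = sum(1 for p, a in pairs if a and not p)   # harmful but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall}


def appeal_rate(appeals: int, decisions: int) -> float:
    """Share of moderation decisions that users appealed."""
    return appeals / decisions if decisions else 0.0


predicted = [True, True, False, True, False]
actual = [True, False, True, True, True]
metrics = moderation_metrics(predicted, actual)
print(metrics["fp"], metrics["fn"])  # 1 2
print(f"precision={metrics['precision']:.2f} recall={metrics['recall']:.2f}")  # precision=0.67 recall=0.50
print(appeal_rate(12, 1000))  # 0.012
```

Reporting false positives and false negatives separately, rather than a single accuracy figure, matters here because the two errors have different costs: one silences legitimate users, the other exposes them to harmful content.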

Quality Assurance

Stages

- Data Quality: We deliver data that is both comprehensive and timely, so that the AI models trained on it produce reliable results.

- Privacy Protection: We handle personal data in line with regulations such as the GDPR and CCPA.

- Data Security: Security measures, including encryption and firewalls, safeguard sensitive information.
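One common way to protect personal data before annotators see it is to pseudonymize identifiers and mask contact details. The Python sketch below uses the standard `hmac`, `hashlib`, and `re` modules; the key, the regular expressions, and the record layout are assumptions, and the patterns are deliberately simple rather than exhaustive. Pseudonymization alone does not make data anonymous under the GDPR, so this is one safeguard, not a compliance guarantee.

```python
import hashlib
import hmac
import re

# Hypothetical secret key; in practice it would come from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Simplified patterns (assumptions): they will miss some formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed hash so records stay linkable without exposing the ID."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-number-like strings before annotators see the text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


record = {"user_id": "user-7", "text": "Contact me at jane.doe@example.com or +1 555 123 4567"}
safe = {"user": pseudonymize(record["user_id"]), "text": redact(record["text"])}
print(safe["text"])  # Contact me at [EMAIL] or [PHONE]
```

A keyed hash (HMAC) is used instead of a plain hash so that someone without the key cannot recover IDs by hashing guessed values.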

QA Metrics

- Defect Density: We continuously monitor the number of defects relative to the size of our deliverables and software, and work to minimize it.

- Privacy Compliance: Regular audits verify that our data handling remains compliant and secure.
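Defect density is conventionally a count of defects normalized by size; for software it is often expressed as defects per thousand lines of code. For annotation work, a natural analogue is defects per 1,000 reviewed items. The Python sketch below assumes that unit and a hypothetical acceptance threshold of 5 defects per 1,000 items.

```python
# Hypothetical acceptance threshold for a delivered batch.
MAX_DEFECTS_PER_1000 = 5.0


def defect_density(defects: int, items_reviewed: int, per: int = 1000) -> float:
    """Defects found per `per` reviewed items (default: per 1,000 annotations)."""
    if items_reviewed <= 0:
        raise ValueError("items_reviewed must be positive")
    return defects * per / items_reviewed


def batch_passes(defects: int, items_reviewed: int) -> bool:
    """A batch passes QA if its defect density is within the threshold."""
    return defect_density(defects, items_reviewed) <= MAX_DEFECTS_PER_1000


# A QA sample of 6,000 annotations in which reviewers found 18 defects.
print(defect_density(18, 6000))  # 3.0
print(batch_passes(18, 6000))    # True
```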

Conclusion

Combining advanced algorithms with human moderation helps filter out harmful or inappropriate content, creating a safer and more enjoyable environment for users. The challenge lies in balancing content freedom against moderation and in adapting to new forms of content and emerging ethical concerns. As user-generated content continues to grow, content moderation remains critical for upholding community standards, protecting users, and sustaining the long-term success and reputation of these platforms.

Compliance and Security

- Quality Data Creation

- Guaranteed TAT (turnaround time)

- ISO 9001:2015, ISO/IEC 27001:2013 Certified

- HIPAA Compliance

- GDPR Compliance

Let's Discuss Your Data Collection Requirements

For a detailed estimate of your requirements, please contact us.