Every machine learning model is built on data. Training a model on a large volume of high-quality data is crucial for its success. Most data scientists will agree that collecting too much data is preferable to collecting too little, especially for computer vision applications that depend on visual data in the form of images and videos. This makes data gathering an essential phase in a machine learning model’s life cycle.

To teach computers to “see” and complete their tasks, machine learning relies heavily on the acquisition of video data. For artificial intelligence (AI) and machine learning (ML) models to become smarter, more precise, and better at identifying visual content, enormous amounts of varied data, including video, are gathered and annotated.

What exactly does video data collection mean?

Video data collection means gathering the footage that a model will later be annotated and trained on. To reduce the risk of bias in a machine learning model, the datasets used in video annotation projects should cover a variety of conditions, such as different demographics, lighting conditions, and levels of background noise.
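One way to check this kind of variety is to tally the metadata recorded for each clip. The following is a minimal sketch, assuming each clip carries a small metadata dictionary; the field names (`lighting`, `background_noise`), the sample clips, and the 30% threshold are illustrative assumptions rather than part of any particular tool.

```python
from collections import Counter

# Hypothetical clip metadata; field names and values are illustrative only.
clips = [
    {"id": "clip_001", "lighting": "daylight", "background_noise": "low"},
    {"id": "clip_002", "lighting": "daylight", "background_noise": "high"},
    {"id": "clip_003", "lighting": "night", "background_noise": "low"},
    {"id": "clip_004", "lighting": "daylight", "background_noise": "low"},
]


def coverage(clips, field):
    """Return the share of clips for each value of a metadata field."""
    counts = Counter(clip[field] for clip in clips)
    total = len(clips)
    return {value: count / total for value, count in counts.items()}


def underrepresented(clips, field, min_share=0.3):
    """List values whose share of the dataset falls below min_share."""
    shares = coverage(clips, field)
    return sorted(value for value, share in shares.items() if share < min_share)


lighting_share = coverage(clips, "lighting")
low_lighting = underrepresented(clips, "lighting")
low_noise = underrepresented(clips, "background_noise")
print(lighting_share)
print(low_lighting, low_noise)
```

A value that never appears in the dataset will not show up in this tally at all, so a list of expected values would need to be checked separately.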

GTS video data collection practices for training AI/ML models

Artificial intelligence and machine learning (AI and ML) models depend on the careful gathering of video data as much as on the algorithms themselves. The manual video data-gathering techniques described here show how much human work goes into training these models.

Manually annotated videos

Each frame is carefully annotated by humans to identify objects, events, and settings. To provide rich data for model training, annotators meticulously assign descriptive tags.
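The per-frame labels described above are usually stored as structured records. The sketch below shows one possible shape for such records; the `BoxAnnotation` and `FrameAnnotation` classes and their fields are a hypothetical schema chosen for illustration, not a format the article prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class BoxAnnotation:
    """One labeled object in one frame (hypothetical schema)."""
    label: str
    x: int       # left edge of the bounding box, in pixels
    y: int       # top edge of the bounding box, in pixels
    width: int
    height: int


@dataclass
class FrameAnnotation:
    """Everything a human annotator assigned to a single frame."""
    frame_index: int
    tags: list = field(default_factory=list)    # descriptive tags (setting, event)
    boxes: list = field(default_factory=list)   # BoxAnnotation items


def labels_per_frame(frames):
    """Map each frame index to the sorted object labels found in it."""
    return {f.frame_index: sorted(b.label for b in f.boxes) for f in frames}


frames = [
    FrameAnnotation(0, tags=["street", "daytime"],
                    boxes=[BoxAnnotation("pedestrian", 40, 60, 30, 80),
                           BoxAnnotation("car", 200, 90, 120, 70)]),
    FrameAnnotation(1, tags=["street", "daytime"],
                    boxes=[BoxAnnotation("pedestrian", 44, 60, 30, 80)]),
]

summary = labels_per_frame(frames)
print(summary)
```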

Dividing up a video

Experts divide the footage into meaningful sections, each marking a distinct event or action. Segmentation helps organize the data and enables models to understand temporal relationships and context.
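A segmented video can be represented as a list of labeled time ranges. This is a small sketch under assumed names: the `Segment` class, the event labels, and the timestamps are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A labeled time range in a video (hypothetical schema)."""
    start_s: float   # start time in seconds, inclusive
    end_s: float     # end time in seconds, exclusive
    label: str


def segment_at(segments, t):
    """Return the segment that covers time t, or None if none does."""
    for seg in segments:
        if seg.start_s <= t < seg.end_s:
            return seg
    return None


def has_gaps_or_overlaps(segments):
    """True if consecutive segments do not meet exactly end-to-start."""
    ordered = sorted(segments, key=lambda s: s.start_s)
    return any(a.end_s != b.start_s for a, b in zip(ordered, ordered[1:]))


segments = [
    Segment(0.0, 4.5, "person enters"),
    Segment(4.5, 12.0, "person picks up item"),
    Segment(12.0, 15.0, "person leaves"),
]

current = segment_at(segments, 5.0)
print(current.label)
print(has_gaps_or_overlaps(segments))
```

Keeping the segments in time order is what lets a model, or a reviewer, reason about which event precedes which.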

Extracting keyframes

The essence of each scene is captured through the careful selection of exemplary frames by human curators. Keyframes are essential points of reference for models to comprehend the narrative and visual material.
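The selection described here is done by human curators. As a complement, a simple automated pass could propose candidate frames for them to review. The sketch below is such a pre-selection step and not the curators' method: it treats each frame as a flat list of grayscale values and uses an illustrative threshold of 30 on the mean absolute pixel difference.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def candidate_keyframes(frames, threshold=30.0):
    """Return indices of frames that differ strongly from the last chosen one.

    The first frame is always a candidate; the threshold is an assumed value.
    """
    if not frames:
        return []
    chosen = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[chosen[-1]], frames[i]) > threshold:
            chosen.append(i)
    return chosen


# Toy frames: flat lists of grayscale values (0-255), four pixels each.
frames = [
    [10, 10, 10, 10],        # scene A
    [12, 11, 10, 9],         # scene A, small change
    [200, 210, 190, 205],    # scene B begins
    [198, 211, 192, 204],    # scene B, small change
]

candidates = candidate_keyframes(frames)
print(candidates)
```

A curator would still decide which of the proposed frames best represents each scene.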

Transcribing recordings

Humans accurately transcribe the words spoken in the video. To support multimodal learning, transcriptions provide text data that is aligned with the audiovisual content.

Analyzing the video’s quality

Human reviewers carefully assess aspects of the video such as clarity, lighting, and resolution.
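Some of these checks can be pre-screened automatically before a reviewer looks at the footage. The sketch below covers only resolution and overall brightness, and leaves clarity to the human reviewer. The function name `frame_quality` and all threshold values are assumptions chosen for illustration.

```python
def frame_quality(frame, min_width=640, min_height=480,
                  dark_below=40, bright_above=215):
    """Flag basic quality problems in one grayscale frame (a list of pixel rows)."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    pixels = [p for row in frame for p in row]
    brightness = sum(pixels) / len(pixels) if pixels else 0.0

    issues = []
    if width < min_width or height < min_height:
        issues.append("low_resolution")
    if brightness < dark_below:
        issues.append("too_dark")
    elif brightness > bright_above:
        issues.append("too_bright")
    return {"width": width, "height": height,
            "brightness": brightness, "issues": issues}


# A tiny toy frame (3 pixels wide, 2 pixels high) with dark pixel values.
toy_frame = [[10, 20, 30], [20, 30, 10]]

report = frame_quality(toy_frame)
relaxed = frame_quality(toy_frame, min_width=3, min_height=2)
print(report["issues"], relaxed["issues"])
```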

Augmenting a video

Human specialists manipulate data deftly to add variants and expand the dataset. Transformations, overlays, and alterations are examples of augmentation procedures that broaden the diversity of data.
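Two of the simplest transformations, mirroring and brightness changes, can be sketched as follows. Frames are represented as lists of grayscale pixel rows, and the brightness shift of 40 is an arbitrary illustrative value. Overlays and other alterations are not covered.

```python
def flip_horizontal(frame):
    """Mirror a frame left to right."""
    return [list(reversed(row)) for row in frame]


def adjust_brightness(frame, delta):
    """Shift every pixel by delta, clamped to the 0-255 range."""
    return [[max(0, min(255, p + delta)) for p in row] for row in frame]


def augment_clip(clip):
    """Return the original clip plus a mirrored and a brightened variant.

    The same transformation is applied to every frame so that each
    variant stays consistent over time.
    """
    return {
        "original": clip,
        "flipped": [flip_horizontal(f) for f in clip],
        "brighter": [adjust_brightness(f, 40) for f in clip],
    }


# A toy clip: two frames, each 3 pixels wide and 2 pixels high.
clip = [
    [[0, 100, 250], [10, 20, 30]],
    [[5, 105, 255], [15, 25, 35]],
]

variants = augment_clip(clip)
print(variants["flipped"][0])
print(variants["brighter"][0])
```

One source clip yields three training clips here, which is how augmentation expands a dataset without new recording sessions.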

These techniques show how important human expertise is in the training of AI and ML models. As AI/ML models develop, combining human-directed procedures with cutting-edge algorithms paves the way for more sophisticated and perceptive machine intelligence.

Why does gathering video data for machine learning matter?

Autonomous vehicles

For instance, if you want your self-driving car to recognize pedestrians on its own, you’ll need video data from various angles so the model can tell which objects are humans and which aren’t. Using this data, engineers can train the system to distinguish between people and common objects, follow traffic laws, avoid likely accidents, and arrive at its destination safely.

Virtual reality (VR) and augmented reality (AR)

Firms are already experimenting with VR to train new staff and develop immersive marketing experiences. VR and AR can also be used to generate synthetic data, providing diverse scenarios for training robust AI/ML models. User interactions and gestures captured in VR/AR environments can further enrich training datasets.

Technology in stores

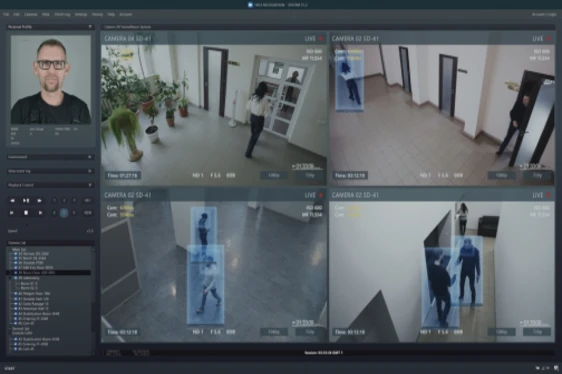

Risk analysis and theft tracking are two prominent uses of video data collection in the retail industry. Deploying multiple cameras strategically in stores captures various angles and perspectives, which enriches the collected video data. IoT sensors and devices can complement the video with contextual information for AI/ML model training.

What difficulties do you encounter when gathering video data?

Collection expenses

Although it is increasingly simple to record videos on cellphones, the recordings may only have a low resolution. Data collectors must therefore purchase expensive cameras to record high-quality footage.

Time-consuming collection

Because videos take longer to record than the images in an image dataset, gathering video data can take more time. For example, if a CV-enabled security monitoring system requires data collected at a specific time of day (dawn, for example), that data will take substantially longer to acquire than data that can be recorded at any time of day.

This is because the data collector can only record the videos within a narrow time window. The same constraint applies to image data collection, but shooting images takes far less time than filming videos.

Unbiased and diverse data collection

According to a study, computer vision systems are noticeably better at identifying pedestrians with lighter skin tones. If a system fails to recognize people with other skin tones, this kind of bias in driverless vehicles could be disastrous.
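A bias of this kind can be made visible by comparing detection rates across groups in an evaluation set. The sketch below assumes each evaluation result is a `(group, detected)` pair. The group names and results are made up for illustration and are not data from any study.

```python
from collections import defaultdict


def detection_rate_by_group(results):
    """Return the share of detected pedestrians for each group.

    results is an iterable of (group, detected) pairs, where detected
    is True if the model found the pedestrian.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in results:
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}


# Made-up evaluation results, for illustration only.
results = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = detection_rate_by_group(results)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups suggests that the training data underrepresents some of them and that more diverse footage needs to be collected.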

The bottom line

To achieve a model’s objectives, it is crucial to train a machine learning model effectively. The ability and effectiveness of a model to comprehend the input environment and arrive at the best judgments depend on the quality of the dataset.

Monitoring model accuracy and fine-tuning the training approach are ongoing processes that keep model performance in line with real-world conditions, even after the model has reached the anticipated accuracy levels.