An image classification dataset is a carefully curated collection of digital images used to train, test, and evaluate machine learning algorithms. Because the algorithms learn from the dataset's example images, the photos must be high quality and varied, covering the range of subjects, viewpoints, and conditions the model will encounter. A training dataset of this caliber helps the resulting model make more accurate decisions, so choosing a trustworthy training dataset is essential for good classification results.

In computer vision, a dataset is a carefully maintained collection of digital images that developers use to train, test, and evaluate their algorithms. The algorithm learns from the examples the dataset contains.

Why Is an Image Dataset Necessary for Machine Learning?

A dataset is a collection of examples used to develop and evaluate a model. The examples may come from a specific field or subject, but datasets are usually built to support a variety of uses. Labeled datasets, in which each example carries a label, are well suited to training and testing supervised models, while unlabeled image datasets are available for training unsupervised models.

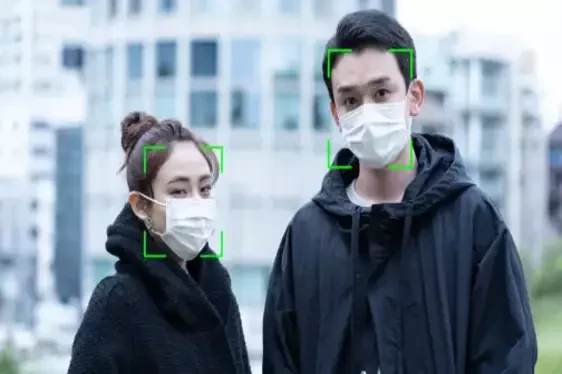

Training examples should never be reused for testing: the model has already seen them, so its predictions on them reflect what it memorized rather than how well it generalizes. A model that performs well on its training data but poorly on new data is said to be overfitting, and testing on training data hides exactly this problem. To avoid it, split the dataset into separate training and testing sets. For example, a machine learning algorithm might be trained on 70% of a face recognition dataset, and data scientists would then use the remaining 30%, which the model has never seen, to evaluate how well it was trained.
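The 70/30 split described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed method: the function name `train_test_split`, the fixed seed, and the hypothetical file names are assumptions for the example. Libraries such as scikit-learn offer a more complete equivalent (including stratified splits).

```python
import random

def train_test_split(items, train_fraction=0.7, seed=42):
    """Shuffle a copy of `items` and split it into disjoint train and test lists."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    shuffled = list(items)                  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # fixed seed -> reproducible split
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for a face recognition collection.
images = [f"face_{i:03d}.jpg" for i in range(100)]
train, test = train_test_split(images)
print(len(train), len(test))  # 70 30
```

Shuffling before cutting matters: if the files are stored in order (for example, grouped by person), a plain slice would put whole groups into only one of the two sets.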

How to build datasets of images for Machine Learning models

Although many models have been pre-trained to recognize specific objects, you will usually need to train them further on your own data, much as with video data collection for AI and ML models. To do this, you must create an image classification dataset of labeled images that match the kinds of images your final model will be asked to predict. GTS can help by handling each of the necessary steps.

Package installation

You can build a scraper with Scrapy, Selenium, or Beautiful Soup, but pre-built Python packages already exist that save time and spare you from writing one from scratch. At GTS, we collect our own image data: our large data collection team gathers images directly from people by visiting different countries and cities.

Scrub the pictures

Eliminate duplicate images within the dataset to prevent bias and redundancy, ensuring balanced training data for more accurate models. Verify and correct labels to maintain dataset integrity, enhancing model performance and reducing potential errors during training and inference.
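Exact duplicates can be found by hashing each file's bytes: identical files produce identical digests. The sketch below is a minimal illustration using only the standard library; the function names and the `*.jpg` pattern are assumptions. It only catches byte-identical copies, so resized or re-encoded near-duplicates would need a perceptual hashing approach, which is not shown here.

```python
import hashlib
import tempfile
from pathlib import Path

def file_digest(path, chunk_size=1 << 16):
    """Return the SHA-256 hex digest of a file's bytes, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def remove_exact_duplicates(folder, pattern="*.jpg"):
    """Delete byte-identical images in `folder`, keeping the first file per digest.

    Returns the list of paths that were removed.
    """
    seen = {}
    removed = []
    for path in sorted(Path(folder).glob(pattern)):  # sorted -> deterministic "first"
        digest = file_digest(path)
        if digest in seen:
            path.unlink()
            removed.append(path)
        else:
            seen[digest] = path
    return removed

# Demo on a throwaway folder: a.jpg and b.jpg have identical bytes.
with tempfile.TemporaryDirectory() as tmp:
    for name, data in [("a.jpg", b"same"), ("b.jpg", b"same"), ("c.jpg", b"other")]:
        (Path(tmp) / name).write_bytes(data)
    removed_names = [p.name for p in remove_exact_duplicates(tmp)]
    kept_names = sorted(p.name for p in Path(tmp).glob("*.jpg"))
print(removed_names, kept_names)  # ['b.jpg'] ['a.jpg', 'c.jpg']
```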

Make a list of the data you need to find

Choose a specific subject and make a list for it, for instance the scientific names of every British butterfly species. Whatever you are passionate about, list the things you want photographs of, along with the search terms you have decided to use.

Download the images

All that remains is to loop through each butterfly in the list and call get_images() with the species name as the search argument. For each species, the function scrapes the search results and saves the first 50 hits in a directory named after that species.
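The loop described above might look like the following sketch. The text does not say which package provides get_images(), so this version takes the search function as a parameter: `fetch(query, limit)` is a hypothetical callable assumed to return raw image bytes, and `collect_images` is an illustrative name. The four species are a small sample of British butterflies, not the full list, and spaces in the names are replaced with underscores for the folder names.

```python
import tempfile
from itertools import islice
from pathlib import Path

# A few scientific names of British butterfly species (illustrative sample only).
butterflies = ["Aglais io", "Vanessa atalanta", "Pieris brassicae", "Polyommatus icarus"]

def collect_images(species_list, fetch, out_dir="images", limit=50):
    """Save up to `limit` results per species into out_dir/<Species_name>/.

    `fetch(query, limit)` is a hypothetical stand-in for whatever scraper or
    image-search package you use; it must return an iterable of image bytes.
    Returns a dict mapping each species to the number of files saved.
    """
    counts = {}
    for species in species_list:
        folder = Path(out_dir) / species.replace(" ", "_")
        folder.mkdir(parents=True, exist_ok=True)
        saved = 0
        for data in islice(fetch(species, limit), limit):  # never keep more than `limit`
            (folder / f"{saved:03d}.jpg").write_bytes(data)
            saved += 1
        counts[species] = saved
    return counts

def fake_fetch(query, limit):
    """Offline stand-in that 'finds' 60 dummy results for any query."""
    return (f"{query} #{n}".encode() for n in range(60))

with tempfile.TemporaryDirectory() as tmp:
    counts = collect_images(butterflies, fake_fetch, out_dir=tmp)
    folders = sorted(p.name for p in Path(tmp).iterdir())
print(counts["Aglais io"], folders[0])  # 50 Aglais_io
```

Passing the fetcher in as an argument keeps the folder and limit logic independent of any particular scraping package, so swapping search back ends does not change the loop.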

The GTS image collection team searches specifically for the image data a machine learning model requires and researches accordingly to meet those requirements. Once you are satisfied with the collected images, the next step is to prepare them for your model by scaling, augmenting, and dividing them into training and test datasets.
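Two common preparation steps are scaling pixel values into a fixed range and augmenting the data with transformed copies. The NumPy sketch below assumes images are already loaded as a `(N, height, width, channels)` uint8 array (resizing to a common size would normally be done first with an imaging library such as Pillow); the function names are illustrative, and random arrays stand in for real photos.

```python
import numpy as np

def scale_pixels(images):
    """Convert 8-bit pixel values (0-255) to float32 values in [0, 1]."""
    return images.astype(np.float32) / 255.0

def augment_with_flips(images):
    """Return the originals followed by a left-right mirrored copy of each image.

    `images` has shape (N, height, width, channels), so reversing axis 2
    mirrors each image horizontally.
    """
    flipped = images[:, :, ::-1, :]
    return np.concatenate([images, flipped], axis=0)

rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(4, 32, 32, 3)).astype(np.uint8)  # 4 stand-in RGB images
scaled = scale_pixels(batch)
augmented = augment_with_flips(scaled)
print(augmented.shape, augmented.dtype)  # (8, 32, 32, 3) float32
```

Horizontal flips are only a safe augmentation when mirroring does not change the label; a butterfly photo is still the same species when mirrored, but a handwritten digit or a road sign may not be. The flipped copies also need the same labels as their originals.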

Labeling image data for ML

These are the steps to label images for computer vision model training.

Identify the kind of data you require for model training

This determines the kind of labeling task you will perform. Collect a wide range of images representing various objects, scenes, and conditions so that the model is robust and generalizes well.

Specify the attributes of the labeled data your model requires

For an image classification task, you must first define the classes. Each labeled data point should specify the category or class of the object present in the image.
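Once the classes are fixed, a common convention is to map each class name to an integer index and reject any label outside the agreed list, which also catches typos introduced during labeling. The class names below are hypothetical, and `encode_labels` is an illustrative helper, not part of any library.

```python
CLASSES = ["cat", "dog", "horse"]  # hypothetical class list for illustration

# Fixed mapping from class name to integer index used for training.
class_to_index = {name: i for i, name in enumerate(CLASSES)}

def encode_labels(labels):
    """Map class-name labels to integer indices, rejecting names not in CLASSES."""
    unknown = sorted(set(labels).difference(class_to_index))
    if unknown:
        raise ValueError(f"labels not in the class list: {unknown}")
    return [class_to_index[name] for name in labels]

print(encode_labels(["dog", "cat", "dog"]))  # [1, 0, 1]
```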

Choose how much of each sort of labeled data you require

Before you begin gathering and labeling image data, work out how many examples of each class you need to train a fair and unbiased ML model.
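A quick way to check this is to count labels per class and compare the largest class with the smallest. In the sketch below, `class_report` is a hypothetical helper and the `max_ratio=1.5` threshold is an arbitrary illustrative choice, not a standard; the label tally is made up for the example.

```python
from collections import Counter

def class_report(labels, max_ratio=1.5):
    """Count examples per class and flag imbalance.

    Returns (counts, balanced), where `balanced` is False when the largest class
    has more than `max_ratio` times as many examples as the smallest one.
    """
    counts = Counter(labels)
    if not counts:
        raise ValueError("no labels given")
    largest, smallest = max(counts.values()), min(counts.values())
    return counts, largest <= max_ratio * smallest

labels = ["cat"] * 120 + ["dog"] * 100 + ["horse"] * 40  # hypothetical label tally
counts, balanced = class_report(labels)
print(dict(counts), balanced)  # {'cat': 120, 'dog': 100, 'horse': 40} False
```

Here "horse" would be the class to collect and label more of before training.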

Select the ideal training data labeling method

There are two main approaches: automated labeling and human labeling. Human labeling usually takes longer and costs more, but it is typically more accurate. If you decide you need human involvement, you can choose among crowdsourcing, outsourcing, and in-house labeling.

Break down the task of labeling

If you choose human labeling as a way to ensure high-quality results, divide your image labeling task into manageable parts.
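Splitting the work usually means cutting the image list into fixed-size batches and handing them out to annotators. The sketch below is one simple way to do that, with round-robin assignment; the batch size, the annotator IDs, and both function names are hypothetical.

```python
def make_batches(items, batch_size):
    """Split a list into consecutive batches of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]

def assign_round_robin(batches, annotators):
    """Deal batches out in turn: batch 0 to the first annotator, batch 1 to the second, and so on."""
    plan = {name: [] for name in annotators}
    for i, batch in enumerate(batches):
        plan[annotators[i % len(annotators)]].append(batch)
    return plan

paths = [f"img_{i:04d}.png" for i in range(1050)]  # hypothetical image paths
batches = make_batches(paths, 200)
plan = assign_round_robin(batches, ["ann_a", "ann_b", "ann_c", "ann_d"])  # hypothetical IDs
print(len(batches), len(batches[-1]), len(plan["ann_a"]))  # 6 50 2
```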

Compose detailed directions

Keep your labeling instructions as simple and unambiguous as possible to make the process more reliable. Things that seem obvious to you may not be obvious to others, so write clear, detailed directions, include examples, and anticipate common mistakes.

Implement quality assurance

Plan how you will ensure that the labeled data is of high quality. This usually means building a pipeline, or a series of labeling and verification stages, for your image labeling process. For example, you might split an object identification task between two groups of annotators: the first group decides whether the target object appears in the image, and the second group marks where the object is.
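The two-group pipeline can be sketched as a majority vote followed by an agreement check. This is a minimal illustration under assumptions not in the text: three stage-one annotators per image, two stage-two annotators drawing boxes as `(x_min, y_min, x_max, y_max)`, and acceptance when the two boxes reach an intersection-over-union (IoU) of at least 0.5, a common but not universal threshold. All data and names are hypothetical.

```python
from collections import Counter

def passes_stage_one(votes, min_yes=2):
    """True when at least `min_yes` stage-one annotators said the object is present."""
    return Counter(votes)["yes"] >= min_yes

def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

# Stage 1 (hypothetical data): "is the object in the image?"
stage_one = {
    "img_001.jpg": ["yes", "yes", "no"],
    "img_002.jpg": ["no", "no", "yes"],
    "img_003.jpg": ["yes", "yes", "yes"],
}
to_localize = [img for img, votes in stage_one.items() if passes_stage_one(votes)]

# Stage 2 (hypothetical data): two annotators each draw a box on the surviving images;
# a box is accepted only when the two drawings agree (IoU >= 0.5).
stage_two = {
    "img_001.jpg": [(10, 10, 50, 50), (12, 11, 52, 49)],
    "img_003.jpg": [(0, 0, 20, 20), (30, 30, 60, 60)],
}
accepted = [img for img in to_localize if iou(*stage_two[img]) >= 0.5]
print(to_localize, accepted)  # ['img_001.jpg', 'img_003.jpg'] ['img_001.jpg']
```

Images that fail the agreement check (here img_003.jpg) would go back for review rather than into the training set.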

A Study on Transfer Learning Using Trained Image Models

To avoid having to train a new model from scratch, transfer learning uses feature representations from a previously trained model. Now let’s look at some strategies for putting transfer learning into practice.

Obtaining the pre-trained model

The first step is to obtain the pre-trained model you want to use for your problem. Pre-trained models are available from repositories such as TensorFlow Hub or PyTorch Hub. Alternatively, you can train a model on a large-scale dataset such as ImageNet and reuse it for transfer learning.

Make a foundation model

Next, instantiate the base model from one of the available architectures. You can optionally download its pre-trained weights at the same time.

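Keep in mind that the final output layer of the base model is sized for its original training task (for example, 1,000 units for the 1,000 ImageNet classes), which usually does not match the number of classes you need. You should therefore remove this final output layer when building the base model.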

Including new trainable layers

The next stage is to add new trainable layers that turn the features learned by the base model into predictions for the new dataset.
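The idea of training only a new output layer on top of frozen features can be shown without a deep learning framework. In this NumPy sketch, `frozen_base` is a stand-in assumption for a pre-trained network with its output layer removed (a fixed projection plus ReLU that is never updated), and `train_head` fits a new softmax layer with plain gradient descent. The learning rate, epoch count, and toy feature clusters are illustrative choices, not recommendations.

```python
import numpy as np

def frozen_base(images, projection):
    """Stand-in for a pre-trained base model: fixed projection + ReLU, never updated."""
    flat = images.reshape(len(images), -1)
    return np.maximum(flat @ projection, 0.0)

def train_head(features, labels, num_classes, lr=0.1, epochs=200):
    """Train a new softmax output layer (W, b) on frozen features by gradient descent."""
    n, d = features.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = features @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(features, W, b):
    """Return the predicted class index for each row of `features`."""
    return np.argmax(features @ W + b, axis=1)

rng = np.random.default_rng(0)

# The frozen base turns images into feature vectors; only its output is used downstream.
images = rng.random((5, 4, 4))
projection = rng.normal(size=(16, 8))
base_features = frozen_base(images, projection)

# Toy frozen features for two classes: clusters around (-1, -1) and (1, 1).
features = np.vstack([rng.normal(-1.0, 0.1, size=(50, 2)),
                      rng.normal(1.0, 0.1, size=(50, 2))])
labels = np.array([0] * 50 + [1] * 50)
W, b = train_head(features, labels, num_classes=2)
accuracy = (predict(features, W, b) == labels).mean()
print(base_features.shape, accuracy)
```

Because only `W` and `b` are updated, training is cheap and needs far less labeled data than training the whole network, which is the main appeal of transfer learning.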

Final thoughts

Building and using image datasets well is critical to creating reliable machine learning models. These datasets serve as the backbone for training robust models capable of recognizing patterns, objects, and scenes. Careful curation ensures diverse representation, which improves model generalization and performance across applications. Datasets also need continual refinement to keep pace with evolving trends and challenges. By following best practices in dataset construction and use, researchers and practitioners can unlock the full potential of machine learning in image-related tasks, from object detection and classification to image generation and enhancement, driving innovation across industries.